Debugging is of course a major part of programming. Arguably most of the time it’s not the most satisfying part of the job, it’s an unfortunate necessity rather than the goal itself. Moreover, I think that most of the bugs I make aren’t interesting (e.g. typos, wrong usage of a library function, nullptr dereferences, off-by-one errors etc.). But sometimes, on rare occasions, one can stumble upon a subtle and sophisticated bug. Resolving such a bug brings true catharsis, gives massive satisfaction and is the ultimate learning experience.

I want to share a story about a man who was staring at the screen with no clue of what was happening, but finished with the great joy of resolving a problem that improved my engineering skills and opened a new dimension of thinking about multithreaded programs. Okay, I know, I got a little bit carried away and perhaps exaggerated. However, I hope that you will feel at least a little impressed by the end of the article.

Context

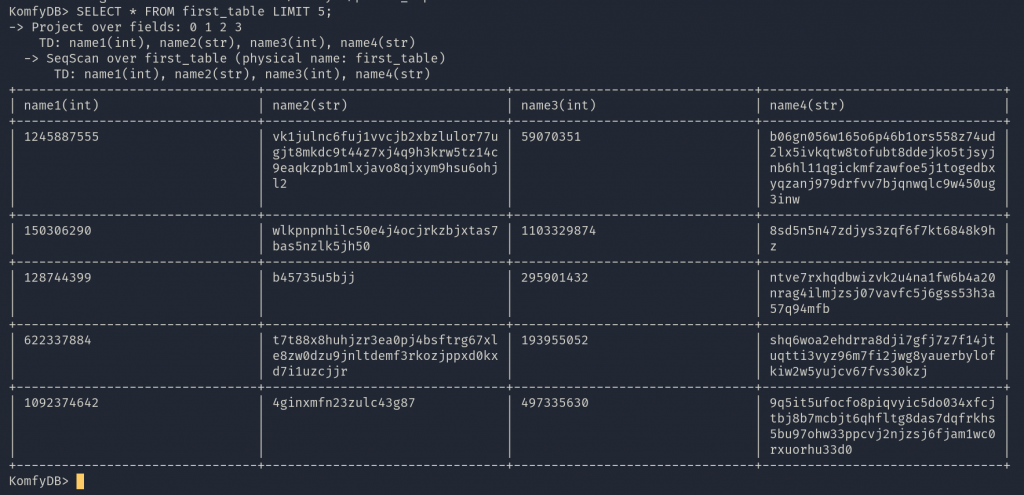

Last semester I started programming a C++ relational SQL database with two of my friends. We named it KomfyDB (check it out on GitHub). It started as a University assignment, but I am treating it very personally and putting extra effort into it. Potentially, I’ll write a separate blog post to talk about my motivation and the project in more detail.

KomfyDB strives to be a full-fledged SQL database. At the moment it supports a small (but decent) subset of the SQL query language and can perform the basic operations. However, we’re aiming to keep it simple, with no advanced features, as the main goal is to have a working database with the most interesting parts implemented. The project has been written from scratch, so there were no tests of any kind prepared for us (which is an unusual situation for a University assignment). Of course, there’s no world in which we’d write a unit test for every function. However, we acknowledge that well-written tests are a necessity in the maintenance of a long-term project. Thus, we’re unit-testing crucial parts of the database and we’re working on a simple framework for end-to-end testing (E2E).

For now, the schema of the e2e test appears simple: Run KomfyDB with no tables and populate it with predefined test data, simultaneously run an SQLite database with the same tables and compare their outputs on various queries. Both databases are run by a Python process that orchestrates, monitors and compares the results of the queries.

Disclaimer. Please bear in mind that all code given in this article is simplified for the sake of clarity. Visit KomfyDB’s repository to see the actual code.

# run_tests.py

def main():

# Instantiate both databases

db1 = SQLiteDatabase()

db2 = KomfyDatabase()

# Instantiate the orchestrator

test_runner = TestRunner(db1, db2)

# Populate databases with predefined data

test_runner.prepare("./testdata/database.sql")

# Run queries and compare the results

test_runner.run_tests(

[ "SELECT * FROM table1", ... ]

)

# test_runner.py

class TestRunner():

...

def run_test(self, query):

result1 = self.db1.execute(query)

result2 = self.db2.execute(query)

if result1 != result2:

assert FalseIt is important to see how the communication between this script and KomfyDB works. We don’t want to write too much boilerplate code in general, so there’s no separate front-end to the database. KomfyDB is a single binary, which takes queries from the standard input (stdin) and outputs the result on the standard output (stdout). Hence, the testing framework simply runs a slightly modified KomfyDB as a subprocess and connects to its stdin and stdout with pipes.

class KomfyDatabase(Database):

def __init__(self):

self.db_process = Popen(

["./komfydb-e2e"],

stdin=PIPE,

stdout=PIPE,

text=True,

)Then, the TestRunner prepares both databases by executing a series of INSERT commands and waits for the execution to finish. After that, the execution of the test queries begins. KomfyDatabase‘s execute function (in the test framework) works as follows:

- Write the query to KomfyDB’s stdin,

- Read the resulting tuples from the stdout,

- Finish reading after receiving

SIGUSR2from KomfyDB.

As I previously mentioned, we want to keep things as simple as possible. A message-based communication requires the usage of an additional library or another boilerplate not-database-related code. On the other hand, the framework doesn’t know before the end of the execution how much output there will be. Also, the pipes are not closed after everything is written, as we possibly want to execute another query and use the same pipe to retrieve the output. Hence, sending a signal after finishing writing all tuples for a given query seemed like a good idea. This is the execute code we ended up with:

# Set up handler for SIGUSR2 that sets the QUERY_DONE_FLAG

QUERY_DONE_FLAG = False

def query_done_handler(signum, frame):

global QUERY_DONE_FLAG

QUERY_DONE_FLAG = True

signal(SIGUSR2, query_done_handler)

class KomfyDatabase():

...

def execute(self, query):

global QUERY_DONE_FLAG

# Reset the flag, it will be set by SIGUSR2 handler,

QUERY_DONE_FLAG = False

# Write query to KomfyDB process

self.write(query)

responses = []

# Non-blocking read until we get the signal

while (response := self.read()) or not QUERY_DONE_FLAG:

if response:

responses.append(response)

# Join what was read and return

return "".join(responses)Unfortunately, Python’s read function does not return with EINTR if a signal comes while waiting for input, so the self.read() function does not block. Otherwise, it would be impossible to check if the QUERY_DONE_FLAG is set after reading the whole output.

I invite you to take a moment and consider the order of the conditions in the while loop. What do you think would be the problem if we switched them? I.e.:

while not QUERY_DONE_FLAG or (response := self.read()):

if response:

responses.append(response)At first glance, it looks as if the code could work. The while loop cycles until the flag is set. When not QUERY_DONE_FLAG is finally false, then for the first time self.read() is executed. The flag is set only after KomfyDB has written all tuples to the stdout, so now everything is read until finally self.read() returns nothing (i.e. there’s nothing left). But what will happen if the output is huge (e.g. millions of bytes)? Remember, the communication passes through pipes, which are buffered, therefore writing to a pipe with full buffer will block. So there we have a deadlock! The code doesn’t read until everything is written and KomfyDB’s process cannot write more than the pipe’s buffer size unless the other side releases the buffer.

Confusion begins

With the testing framework finished we could finally test KomfyDB on bigger tables and complicated queries. Thanks to this I found and fixed bugs that we didn’t catch during manual testing, which was quite satisfying. Then it was time to push the changes to our GitHub repository. This was the moment when the madness began – despite the fact that the tests were successful locally, they failed in the GitHub Actions.

You can probably imagine the discouragement that I felt trying to resolve what was happening. All the information that you get from GitHub Actions is the output of the run commands. To try and fix any problem in the CI it’s necessary to make a change locally, commit it, push it and wait for the CI to finish, which requires setting up a container, downloading necessary tools and building everything. It takes approx. 5 minutes each time until you get the result of the run tests. It is the easiest recipe for madness.

For some reason, the execute in KomfyDatabase didn’t return any output. It certainly got the SIGUSR2 and set the QUERY_DONE_FLAG, as it didn’t run indefinitely, but it didn’t get any response. Our first guess was that there may be an issue running KomfyDB as a subprocess from Python in the CI. Maybe the path to the binary was different for some reason, or perhaps it didn’t load the test tables correctly, maybe the pipes were somehow configured differently in the container. After some investigation, it turned out that not only KomfyDB was running, but it also set up the tables correctly. Moreover, it did output the queries to its stdout, as it was the test runner that didn’t get any input.

I’d like to again emphasize, that everything was perfectly working on our machines. The outputs were exactly as expected and every additional debug information was correct. Almost all of it was also correct in the CI, except for the fact that the Python process didn’t get any input from the stdin.

We finally noticed that rerunning the CI job sometimes, but rarely, finished successfully. This gave the idea that there must be some race condition. However, no matter how much we run the tests locally, they would always be successful and, in contrast, almost always failed in the CI job. This conclusion brought us to the point where we stared at the while loop in the execute function. Have a look at it again:

# Non-blocking read until we get the signal

while (response := self.read()) or not QUERY_DONE_FLAG:

if response:

responses.append(response)Try figuring out what’s wrong here on your own!

Okay, if you finished chewing on that, you can read further. Imagine the following scenario:

- Python process tries to read from the stdin, but there’s nothing, so

(response := self.read())returns the empty string, - The Python process is preempted (i.e. the operating system decides to change the current running process and puts this one to sleep) and KomfyDB gets the CPU.

- KomfyDB runs the whole query (which takes some time) and writes all the tuples to stdout (but no more than the pipe’s buffer size) and only after that sends

SIGUSR2to its parent process (which is the Python test runner). - Python process gets back on the CPU. The first condition of the alternative was false before the preemption, so the second condition is checked. But it’s also false, as the signal was already sent and

QUERY_DONE_FLAGis now set toTrue. The loop finishes and we do not read anything.

Read the 3rd point again. Doesn’t it sound ridiculous? How could an interleaving like this ever happen? Theoretically, it is a race, but realistically it seems unimaginable that the Python process doesn’t get back on the CPU for the whole execution of a possibly big query! Surprise: it is possible.

Michał, co-CEO of KomfyDB Inc., suggested that GitHub runs the CI on one core, but locally we do it on many. So, I tried to run the tests on one core with the taskset command (it’s part of the ‘util-linux’ package). And then it happened – the tests kept failing almost every time I run them.

Lesson learned

First of all, it was extremely satisfying to see the tests failing locally. For most of the debugging process, it seemed that we were trying to figure out some strange GitHub Action’s behaviour, that has no added value for us or the project. It was the complete opposite after all. If not for the CI, we would have probably never found this race condition (or even worse, find it after some time and search for a bug in the database). Also, I think it is much nicer to resolve a bug that was made by a faulty algorithm rather than a silly mistake. In the end, it is the most valuable lesson to be learned from such situations. It extends your understanding of the world.

The fix for our problem may look silly, but I’m pretty sure it is now completely correct and there’s no much cleverer way to do it. The first approach (check flag first, read second) reads everything written to the pipe even if the flag is already set to true, but is subject to a possible deadlock. The second solution prevents any deadlock but allows for the race condition to happen. The final result combines the two previous solutions. The final code looks like this:

while (

(response := self.readline())

or not QUERY_DONE_FLAG

or (response := self.readline())

):

if response:

responses.append(response)To summarise my thoughts on this subject:

- This is the first time I’ve found a race-condition bug in the wild. I am familiar with the concepts of multithreading and its possible troubles, I’ve completed many tasks regarding synchronization during multiple university courses. However, in those cases, I knew what I was looking for and had an idea of where I needed to be the most cautious. Here it happened completely naturally and was not forged in any way.

- You really cannot assume anything about the timeline of a multithreaded system. Although some threads’ interleaving scenarios may sound absurd, they will probably happen. You cannot make optimistic assumptions and think only about a happy path.

- In this particular case, I think that a little extra effort into some more sophisticated communication mechanism would be a better idea. Multithreading is a dangerous territory, so it’s probably best to use existing and verified solutions instead of writing them on their own. However, I don’t regret the decision that much, as we’re starting to work on transactions, so it’s good that we get this experience now.

Final words

If you get to this point then I hope you’ve enjoyed this story and also get some insight from our mistake. If you have any thoughts or comments on our project or on our solution for the E2E testing, then please do not hesitate to reach out or post a comment under this article.

thats a sneaky f*cker

What was the reason for using signals to separate the tuples instead of special characters / more complex output format / prepending the output with byte count?

> Prepending the output with byte count

This couldn’t work, as you do not know the size of the output in the beggining.

> special characters / more complex output format

Yeah, I guess that could’ve been a better approach. I remember we discussed it but went for signals for some reason, I’m not sure why now.